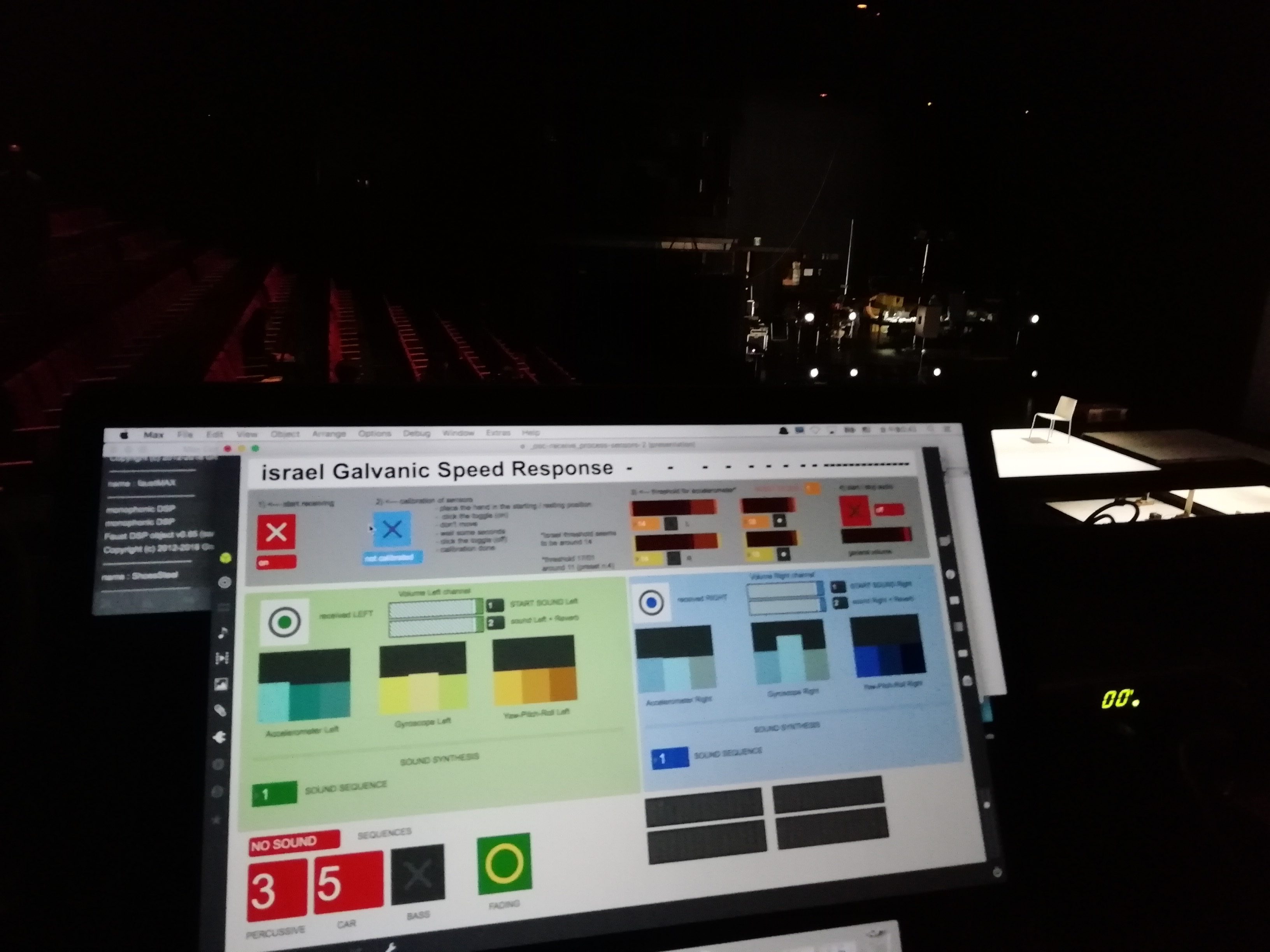

iGSR, aka israel Galvanic Speed Response, is a movement-to-sound system developed in collaboration with, and for, Israel Galvan. It is composed of a custom-made motion-sensing device (worn by the performer) and software for movement analysis and sound generation.

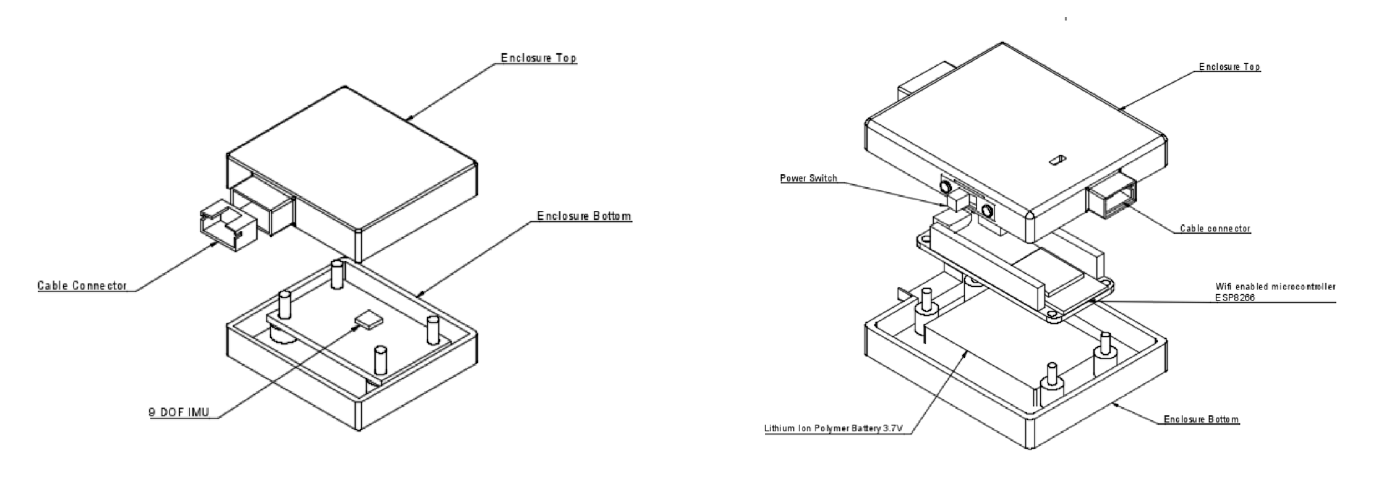

The sensing device is composed of a 10-DOF IMU and an ESP8266 Wi-Fi microcontroller. The software is developed in Max-MSP, with some custom sound synthesis modules written in Faust.

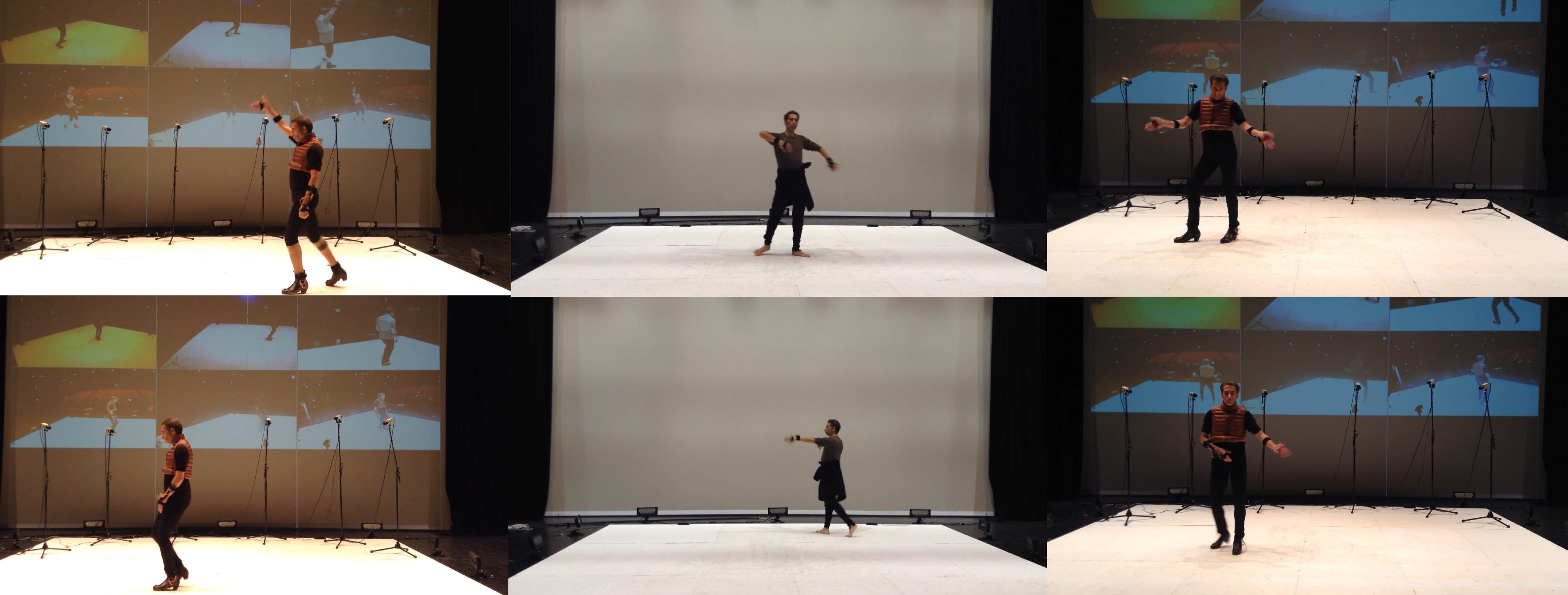

Here I have collected some of the tests we did at YCAM between 2018 and 2019 during the production and creation of the performance “Israel & イスラエル”. They are just a brief excerpt from long hours and days of working, tuning, and refining sensors, code, and choreography.

One of the most interesting aspects of working with a dancer like Israel was his mix of interest in digital technologies and deep, critical distance from them. His ideas are very sharp, and he is always looking for the most direct and visceral solution. Moreover, Israel has developed many performances in which he incorporated different objects into his dance (like drums, a piano, pebbles, and even a coffin). For this reason, it was very important for him to find the right way to create a dialog with the devices: not to adapt himself to technology, but to make technology part of his expression.

00 – Movement / Voice

One of the first tests used a system that lets a performer remix a recorded sample of his voice with his hand movements. What distinguishes this system is that it keeps a consistent relationship between the magnitude of the movement and the spectral characteristics of the sound sample. The dancer records his voice; the software application then loads, analyzes, and segments the recording, and re-synthesizes it based on the data (acceleration, force) produced by the smartphone Israel holds. At one point, another person joins him in a small jam session of movements and voice.
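The actual system was built in Max-MSP and its analysis is not described here, so the following is only a minimal Python sketch of the general idea: segment a recording, order the segments by a spectral feature, and let the movement magnitude choose which segment to play. The zero-crossing rate as a stand-in for "spectral characteristics", the fixed segment size, and all function names are assumptions made for illustration.

```python
import math

def segment(samples, size):
    """Split a list of samples into consecutive fixed-size segments."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]

def zero_crossing_rate(seg):
    """Crude brightness proxy (assumption): share of adjacent pairs that change sign."""
    crossings = sum(1 for a, b in zip(seg, seg[1:]) if (a < 0) != (b < 0))
    return crossings / (len(seg) - 1)

def build_index(samples, size):
    """Return the segments ordered from darkest to brightest."""
    return sorted(segment(samples, size), key=zero_crossing_rate)

def accel_magnitude(ax, ay, az):
    """Euclidean norm of one 3-axis acceleration reading."""
    return math.sqrt(ax * ax + ay * ay + az * az)

def pick_segment(index, magnitude, max_magnitude):
    """Map a movement magnitude onto the ordered segments (small -> dark, large -> bright)."""
    norm = min(max(magnitude / max_magnitude, 0.0), 1.0)
    return index[min(int(norm * len(index)), len(index) - 1)]
```

With this mapping, a gentle gesture always retrieves the darker fragments of the voice and a sharp gesture the brighter ones, which is one simple way to keep the movement-to-timbre relationship consistent.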

01 – Sounding Virtual Objects

In this version, I experimented with the concept of the dancer playing virtual objects that are placed around him (in this case behind him) and/or on his own body. When different virtual objects collide with each other, a sound is produced.
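As a rough illustration of the collision idea, here is a minimal Python sketch that models each virtual object as a sphere and reports a trigger only on the frame where two spheres start to overlap. The sphere model, the `Sphere` and `CollisionTrigger` names, and the assumption that a position for the hand is already available from the motion analysis are all hypothetical; the original patch may work quite differently.

```python
import math
from dataclasses import dataclass

@dataclass
class Sphere:
    """A virtual object: a named sphere in 3D space (hypothetical model)."""
    name: str
    x: float
    y: float
    z: float
    radius: float

def collide(a, b):
    """Two spheres collide when their centers are closer than the sum of their radii."""
    distance = math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))
    return distance <= a.radius + b.radius

class CollisionTrigger:
    """Reports a pair only on the frame where it starts colliding."""

    def __init__(self):
        self.active = set()

    def update(self, spheres):
        """Return the sorted list of (name, name) pairs that began colliding this frame."""
        now = set()
        for i, a in enumerate(spheres):
            for b in spheres[i + 1:]:
                if collide(a, b):
                    now.add((a.name, b.name))
        onsets = sorted(now - self.active)
        self.active = now
        return onsets
```

Reporting only the onset means a sound is produced once per hit rather than continuously while the objects overlap.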

I – Hand / Zapateo

Israel Galvan is renowned for his virtuosic zapateado* style. For this performance, his wish was to augment his body and extend his footwork technique to his hands. He expressed the desire to use his hands like his feet.

* In flamenco, zapateado also refers to a style of dancing which accents the percussive effect of the footwork (zapatear is a Spanish verb, and zapato means “shoe”). In the footwork of particular zapateado, “the dancer and the guitarists work together in unison, building from simple foot taps and bell-like guitar tones to rapid and complex steps on a repeated melodic theme.” (Wikipedia)

YCAM – Creation Session III, January 2019

YCAM – Creation Session III, January 2019

II a – “El Coche”

Another theme we explored was the relationship between hand movements and generative sound. Inspired by the general theme of the performance, the idea of a human dancing with his AI-self, we tried to investigate the idea of Israel Galvan becoming a machine, a sort of Tetsuo-like character.

Here, the movements of Israel were passed to a custom-made sound model of a car engine, as an ideal transmission of different kinetic energies and metaphors.

The sound of a car engine, especially when pushed to its limit, is very rough and powerful. On the surface, this creates a very strong contrast with the usual soundscape of flamenco, but not for Israel Galvan.

YCAM – Creation Session II, November 2018

YCAM – Creation Session III, January 2019

YCAM – Creation Session III, January 2019

II b – Machine / Man

Following the previous example, we explored a sonification of the movements in the manner of a video game, where a character encounters a robotic machine that cranks whenever it moves.

YCAM – Creation Session II, November 2018

III – Deep Freq / Body

Another test explored the relation between Israel Galvan’s movement and the generation of very low frequencies. Thanks to the powerful, massive subwoofers of YCAM Studio A, Israel’s subtle movements can be transmitted to the audience as sound pressure that makes the entire theater shake.

YCAM – Creation Session III, January 2019

IV – Light / Body

Just a simple test with movement, sound, and the light system (developed by Satoru Higa, Fumie Takahara, and Yohei Miura).

YCAM – Creation Session III, January 2019

Prototypes

The first experiments used the built-in sensors of a consumer Android smartphone. We used this configuration, together with several software prototypes, for the first creation session in 2018.

Then I developed the first prototype of the wearable device and the software application. The device was designed to be small, light, and flexible: flexible because we still needed to understand how, and on which part of the arm and hand, the dancer was going to wear it. I developed two elements (one for the sensors and one for the microcontroller and the battery) that can be easily reconfigured by connecting them with cables of different lengths. Together with a more complete and easy-to-use Max-MSP patch (which included several types of motion analysis, sensor filtering, sound synthesis, and seven types of movement-sound relations), we shipped the device to Spain. This allowed Israel and his team to evaluate and test the system and give us feedback.
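The filters used in the patch are not described in this post. As one common possibility, here is a minimal Python sketch of a one-pole low-pass filter applied to the magnitude of the acceleration, which turns jittery IMU readings into a smoother "movement energy" curve. The class and function names and the `alpha` smoothing factor are assumptions for illustration only.

```python
import math

class OnePoleLowPass:
    """Exponential smoothing: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""

    def __init__(self, alpha):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None  # no output until the first sample arrives

    def step(self, x):
        """Feed one sample and return the smoothed value."""
        if self.state is None:
            self.state = x  # start from the first sample to avoid a startup ramp
        else:
            self.state += self.alpha * (x - self.state)
        return self.state

def movement_energy(samples, alpha=0.2):
    """Smooth the magnitude of successive (ax, ay, az) acceleration readings."""
    lowpass = OnePoleLowPass(alpha)
    return [lowpass.step(math.sqrt(ax * ax + ay * ay + az * az))
            for ax, ay, az in samples]
```

A smaller `alpha` gives a steadier but slower response; a larger one follows sharp gestures more closely at the cost of more jitter.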

The last version of the device was developed together with Richi Owaki from YCAM InterLab. We shrank the components into a single, very compact case: very light, easy to wear, and stable.

And then, it got on stage. Pay attention to Israel’s crazy outfit.